Ambient and Abundant: Intelligence Beyond the Scaling Era

By Harrison Dahme, CTO and Partner at Hack VC

Executive Summary

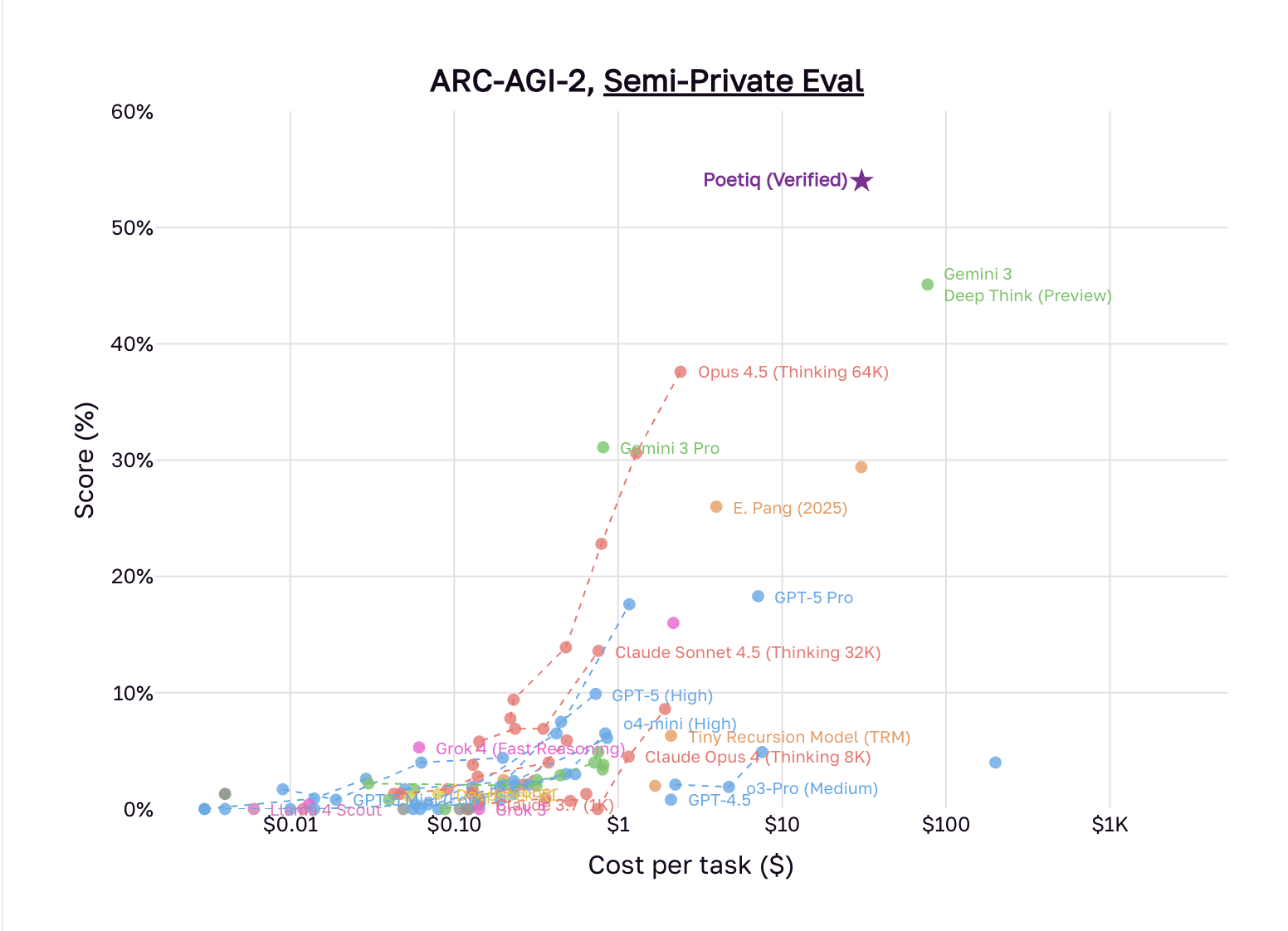

The AI landscape is approaching a significant inflection point. Recent signals - including Ilya Sutskever's observation that "the scaling era is over; we're back in the era of research" and benchmarks of ARC-AGI-2 performance vs. cost - suggest that the current modality of scaling transformer models for better performance is experiencing diminishing intelligence returns.[1]

ARC-AGI-2 benchmark: a rough approximation of the cost per unit of intelligence for each model.

The analysis in this essay examines:

- Why the transformer architecture represents a local maximum, not a global optimum

- The emerging architectures that promise orders-of-magnitude efficiency gains

- Historical patterns from previous general-purpose technology transitions

- Where value is likely to migrate as cognition becomes cheap and ubiquitous

- Investment implications across the stack

The central thesis: when reasoning becomes commoditized, value migrates to trust, orchestration, and the physical world. Trust infrastructure, as we see it, is crypto: cryptography, incentive structures, and consensus mechanisms. We have more writing on the subject here. The winners of the next decade will not be determined by who owns the most GPUs, but by who controls the new chokepoints in an ambient intelligence stack.

We start off technical to lay the foundation and provide grounding, shift fairly quickly to an investor's lens and historical analogs, and finish with where we see durable value creation happening. Also, none of the following is investment advice, nor is it the opinion of my firm, Hack VC. This is one of many possible futures.

1. The Transformer Paradox: Optimal for Hardware, Suboptimal for Intelligence

The transformer architecture has dominated AI development not because it represents the optimal approach to machine cognition, but because it maps efficiently onto existing hardware constraints.

The Hardware Path Dependency

The core operation powering large language models (specifically multilayer perceptrons, or MLPs) is matrix multiplication. This is embarrassingly parallelizable. Decades of linear algebra optimization from gaming GPUs, the maturity of CUDA, and accessible frameworks like PyTorch created an environment where the transformer's attention mechanism was buildable at scale.

This represents a common pattern in technology development: solutions emerge not from first-principles optimization, but from the intersection of theoretical possibility and practical constraint. The transformer architecture reflects how GPUs prefer to process information, not necessarily how intelligence is most efficiently manifested, nor how it actually emerges.

Biological Contrast

The brain - the only existence proof for general intelligence - operates on fundamentally different principles:

- Sparse, event-driven activation rather than dense matrix operations

- Dynamic topology with synapses and neurons, rather than fixed weight matrices

- A power budget measured in watts, supplied by the human body, not megawatts

- Even an infant develops a continuous world view and an understanding of causality, whereas today’s LLMs truly struggle - see our writing on physical manifestations of synthetic intelligence, aka “robot brains.”

This suggests the current paradigm represents a local maximum -- an impressive one that produces systems like GPT-5 and Claude, but a local maximum nonetheless.

2. The Architecture Renaissance

The past 18 months, skewing more recently, have seen a proliferation of fundamentally new approaches, each designed around efficiency in the learning modality, rather than scaling. In our opinion, these are all more elegant solutions than linear algebra pushed to its extreme. It is also our opinion that the universe converges towards elegance and simplicity.

Liquid Neural Networks (MIT/Liquid AI, Fractal)

- Neurons with behaviors governed by differential equations rather than static weights

- Dynamic adaptation over time, inspired by biological nervous systems

- Production models demonstrating 1,000x parameter efficiency on certain tasks
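
To make "governed by differential equations" concrete, here is a minimal sketch of a single neuron in the liquid time-constant style, integrated with explicit Euler steps. The update rule follows the general form found in the liquid time-constant literature; the function names, the sigmoid gate, and every parameter value are our own illustrative assumptions, not Liquid AI's or Fractal's production code.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def ltc_step(x, u, dt, tau=1.0, w_in=2.0, w_rec=0.5, bias=-1.0, a=1.0):
    """One explicit Euler step of a single liquid time-constant style neuron.

    dx/dt = -(1/tau + f) * x + f * a, with f = sigmoid(w_in*u + w_rec*x + bias).
    The gate f depends on the input u, so the effective time constant
    1 / (1/tau + f) shifts with the signal instead of being a fixed weight.
    All parameter defaults are hypothetical.
    """
    f = sigmoid(w_in * u + w_rec * x + bias)
    dxdt = -(1.0 / tau + f) * x + f * a
    return x + dt * dxdt


def simulate(inputs, dt=0.05, x0=0.0):
    """Run the neuron over a sequence of scalar inputs and return the state trace."""
    trace = []
    x = x0
    for u in inputs:
        x = ltc_step(x, u, dt)
        trace.append(x)
    return trace


if __name__ == "__main__":
    # Hypothetical input: silence, then a sustained pulse, then silence again.
    signal = [0.0] * 40 + [1.0] * 80 + [0.0] * 40
    states = simulate(signal)
    print(f"state before pulse:    {states[39]:.3f}")
    print(f"state at end of pulse: {states[119]:.3f}")
    print(f"state after decay:     {states[-1]:.3f}")
```

The point of the sketch is only that the neuron's response speed is itself a function of what it is seeing, which is the property the bullets above describe as dynamic adaptation over time.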

Continuous Thought Machines (Sakana AI)

- Iterative refinement architectures where reasoning unfolds over continuous steps

- Self-referential loops enable models to recognize and improve their own reasoning

- Darwin-Gödel inspired approaches to metacognition

State Space Models (Mamba, RWKV)

- Linear complexity with sequence length (vs. quadratic for transformers)

- Recurrence without traditional RNN training pathologies

- Order-of-magnitude inference efficiency gains
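
As a rough illustration of the linear vs. quadratic point, the sketch below counts multiplies for one attention layer's score and mixing steps against a fixed diagonal linear recurrence. It is a simplified cost model under our own assumptions: projections, constants, and hardware effects are ignored, `d_state=16` is an arbitrary choice, and the toy recurrence uses fixed parameters, whereas models such as Mamba make them input-dependent.

```python
def attention_mults(seq_len: int, d_model: int) -> int:
    """Approximate multiplies for attention scores (QK^T) plus mixing (weights times V).

    Each of the two steps costs seq_len * seq_len * d_model, so the total
    grows quadratically with sequence length. Projections are ignored.
    """
    return 2 * seq_len * seq_len * d_model


def linear_scan_mults(seq_len: int, d_model: int, d_state: int) -> int:
    """Approximate multiplies for a diagonal linear recurrence.

    Per token, each of the d_model * d_state state entries needs one multiply
    for the decay, one for the input, and one for the readout.
    """
    return 3 * seq_len * d_model * d_state


def diagonal_scan(inputs, decay, in_gain, out_gain):
    """Toy single-channel recurrence: h_t = decay * h_{t-1} + in_gain * x_t, y_t = out_gain * h_t."""
    h = 0.0
    outputs = []
    for x in inputs:
        h = decay * h + in_gain * x
        outputs.append(out_gain * h)
    return outputs


if __name__ == "__main__":
    d_model, d_state = 64, 16  # hypothetical sizes
    for n in (1_024, 8_192, 65_536):
        ratio = attention_mults(n, d_model) / linear_scan_mults(n, d_model, d_state)
        print(f"seq_len={n:>6}: attention / scan multiplies = {ratio:,.1f}x")
```

Under this model the gap widens in direct proportion to sequence length, which is why the efficiency argument is strongest for long contexts.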

Thermodynamic Probability Modeling (Extropic, Normal)

- Scalable probabilistic computer, whereby the machine thinks in statistics vs. bits

- Probabilistic circuits that perform sampling tasks using orders of magnitude less energy than the current state of the art

- New generative AI algorithms for the codesigned hardware that can use orders of magnitude less energy than existing algorithms

More traditional Mixture-of-Experts at Scale (DeepSeek, MiniMax)

- Sparse activation where only relevant experts engage

- DeepSeek R1 achieved 96% cost reduction compared to comparable frontier models

- The architectural equivalent of selective attention
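
Sparse activation can be sketched as top-k routing: a router scores every expert, but only the k best actually run. The code below is a toy under stated assumptions. The experts are plain scalar functions, the router scores are passed in directly rather than produced by a learned gating network, and none of it is drawn from DeepSeek's or MiniMax's implementations.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k=2):
    """Return [(expert_index, weight)] for the k highest-scoring experts.

    Weights are renormalized over the selected experts so they sum to 1.
    """
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_scores, k=2):
    """Evaluate only the selected experts and mix their outputs by router weight."""
    selected = route_top_k(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in selected)
    return output, [i for i, _ in selected]


if __name__ == "__main__":
    # Hypothetical experts and router scores, for illustration only.
    experts = [lambda v: v + 1, lambda v: 2 * v, lambda v: v * v, lambda v: -v]
    scores = [0.1, 2.0, 1.5, -1.0]
    y, used = moe_forward(3.0, experts, scores, k=2)
    print(f"experts used: {used} of {len(experts)}, output: {y:.3f}")
```

With k=2 of 4 experts, half of the expert compute is skipped for this input; production systems apply the same idea with many more experts per layer.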

Information Lattice Learning (Kocree)

- Semantic topology vs. token co-occurrence statistics

- Higher levels of abstraction, offering interpretability at orders of magnitude lower cost

Neuromorphism, Nested Learning, and Biomimicry (Opteran)

- Learning from evolutionary biological systems vs. minimizing error over a complex probabilistic distribution

- Specialized circuits and graphs for specific functions, small and fast, with short planning windows that can adapt organically to a live environment

- Behavioural rules vs. learned patterns

Weird and wonderful Hyperbolic and Manifold Structures

- Non-Euclidean representation spaces where hierarchical structures embed naturally

- Better topology matching for relational and semantic knowledge

- We’re being deliberately vague here so as not to give anything away

These architectures share a crucial property - orders of magnitude fewer mathematical operations per unit of useful cognition. We propose calling these units Shannons: the information-theoretic equivalent of FLOPs per bit of actionable knowledge. Fewer FLOPs translate to fewer transistors switched, fewer watts consumed, fewer dollars spent, and more relevant surfaces for intelligence.

We don’t have strong opinions on which one will eventually win, as they’re all in roughly the same problem space and grounded in stochastics. We think the winning solutions will be determined by who is good, fast, and cheap enough, with good enough distribution partners, that model and hardware co-designers commit to a steep learning curve in both developer experience and capital. Almost certainly, though, an intermediate step is utilizing the existing form factors.

3. The Market Dislocation

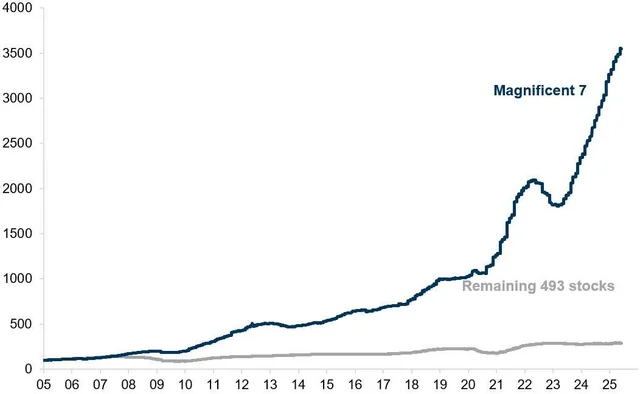

SPY without MAG7. X axis is year (i.e., 05 is 2005).

Current market pricing, all the way up to the S&P 500, reflects a specific assumption: perpetual scaling of the transformer paradigm. This manifests as:

- Multi-hundred-billion-dollar capex commitments from hyperscalers

- Sustained GPU demand projections at current architecture specifications

- Power infrastructure buildouts designed for transformer-scale compute

- Valuations premised on continued compute constraint for a decade or more

The Efficiency Frontier Problem

Research and software improvements are orders of magnitude faster and cheaper than infrastructure deployment. If next-generation architectures deliver 100-10,000x compute efficiency gains (as early results suggest), significant infrastructure investment may be misallocated. This has historical parallels, such as the overinvestment in canal infrastructure immediately before the railroad era, or the overinvestment in and overvaluation of transatlantic fiber optic cables in the earliest days of the internet.

The Market is Not the Economy

Additionally, several metrics suggest dislocation between market pricing and underlying fundamentals:

- Declining job postings alongside rising equity indices

- Accelerating open-source catch-up (DeepSeek R1 reaching near-frontier performance at 96% lower cost)

- Research timelines compressing (novel architectures moving from paper to production in 3-5 years vs. the decade-long transformer development cycle)

- Scary amounts of consumer debt per capita and delinquency rates per the FRED datasets

What happened in November of 2022?

Twitter, Burry, and Wall Street love to talk about how we’re in an AI bubble. And we think they’re syntactically right, just not semantically right. If this thesis holds, then it follows that the economy and market will briefly synchronize while the market realizes the extent of the overspending on capex - and crucially labour will no longer be an input to capital. Instead input costs will be correlated with the compute required to replace that labour, before intelligence sufficiently diffuses and boosts firms that are able to build trust moats (discussed later). Whether we’re able to distribute the abundance in a way that’s cohesive with the fabric of society is an entirely different and separate governance question which we don’t explore here. We think cryptography is the crux, but first let’s explore some historical parallels.

4. Historical Precedents and Patterns

An analysis of previous general-purpose technology transitions reveals a couple of consistent patterns. The first is the Jevons paradox: as the unit cost of a lumen or a watt came down, usage and applications increased rapidly via diffusion. The second is that value first accrues to the infrastructure providers, before platforms emerge that allow for vertical reorganization, which finally ends in consumer capture. There’s no reason to think that intelligence will be any different.

Winners at each phase are often different companies. Railroad barons did not become automobile magnates. Telephone monopolists did not capture the internet. Mainframe manufacturers did not dominate personal computing. This is a common case study in business schools. The technology changes; moats erode unless firms proactively cannibalize themselves.

It’s worth noting that new technologies rarely arrive in their final modality. We first take familiar mental models and form factors to abstract away the technology (e.g., road and car widths being directly correlated with how wide two warhorses were when pulling a Roman chariot, computers with desktops and recycling bins, directory structures, etc.). This raises the question of what the true form factor of generative AI will look like. We think that text is a clumsy interface to express intent.

5. Defining Abundant, Ambient Intelligence

Abundant, ambient intelligence has three characteristics.

1. Cheap

The marginal cost of cognitive operations (document drafting, data analysis, code generation, contract review) approaches zero. The primary cost becomes infrastructure, not usage -- analogous to the cost of a lumen of light after electrification.

2. Ubiquitous

Intelligence becomes available wherever sensors or interfaces exist: mobile devices, vehicles, productivity software, household appliances, manufacturing equipment, API endpoints. Cognition is embedded in existing surfaces rather than accessed through dedicated interfaces. Because adding intelligence requires only a marginal increase in power and hardware, it diffuses everywhere.

3. Contextual

Systems maintain persistent memory, identity, and environmental access. They act on behalf of users rather than merely responding to queries.

When these thresholds are crossed, the implications extend beyond task automation to fundamental restructuring of firms, labour markets, and value distribution.

6. Productive Friction and Value

So if the research directions being explored currently are viable, and if we see the diffusion of abundant, ambient intelligence, then reasoning and execution will commoditize, and value will migrate to what remains scarce. Friction tends to be a great attractor for value.

Human Elements

As AI capabilities improve, certain non-scalable human elements become more valuable:

- Trust relationships: privacy, integrity, confidentiality, consistency, sovereignty

- Cultural coherence and meaning-making: integrating information into narratives and communities in a way that makes sense given human cultural context, not just token probabilities

- Sincerity, authenticity, and effort: where something has value precisely because it required effort or real sentiment

- Regulatory and legal positioning: operating in complex domains (healthcare, finance, critical infrastructure, etc.) - areas with non-artificial friction

- There are also sources of artificial friction, which we are bearish on. EU governance is a great example of this.

- Taste and aesthetic judgment: determining what is good or appropriate, not merely plausible

Verification, Deterministic Execution, Safety, and Provability

Democratized AI capabilities expand the attack surface, creating demand for:

- Verifiable interpretability: why a model suggested what it did, along with its reasoning - potentially in a formally verifiable way. There are some promising approaches using domain-specific languages (DSLs) here as a first step.

- Deterministic execution rails: transaction layers with guardrails and auditability, along with an index of where the new attack surface area lies (see the Claude Code jailbreak here and Anthropic’s threat intel report here). Hallucinations are a feature of LLMs, even when we turn the temperature down; the point here is more about having a referential kernel of truth.

- Potentially trust marketplaces: systems for verified attention, content, and decisions, both synthetically and organically made. This could be extended to prediction markets, as an eval for how well a model is able to build a cohesive version of the present to reason about the future.

- Human-in/on-the-loop verification: incentivized human oversight for edge cases
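
One small building block for the auditability described above is a hash-chained log, where each record commits to the one before it so that later edits are detectable. The sketch below uses only Python's standard `hashlib` and `json` modules. The record fields and function names are hypothetical, and the scheme is tamper-evident only: a real system would add signatures, replication, or consensus on top, none of which is shown here.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """SHA-256 over the previous hash plus a canonical JSON encoding of the payload."""
    body = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: list, payload: dict) -> None:
    """Append a record that commits to the hash of the record before it."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"payload": payload, "prev": prev_hash, "hash": entry_hash(prev_hash, payload)})


def verify_log(log: list) -> bool:
    """Recompute every link; any edited payload or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    # Hypothetical agent actions, for illustration only.
    log = []
    append_entry(log, {"agent": "agent-a", "action": "quote", "amount": 100})
    append_entry(log, {"agent": "agent-a", "action": "transfer", "amount": 100})
    print("intact log verifies:", verify_log(log))
    log[1]["payload"]["amount"] = 9999  # simulate after-the-fact tampering
    print("tampered log verifies:", verify_log(log))
```

The design choice worth noting is the canonical JSON encoding (`sort_keys=True`): without a deterministic serialization, two honest parties could compute different hashes for the same record.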

Interoperability

Assuming the incumbent model providers and frameworks are going to own the orchestration and meta-cognitive layers (potentially through self-referential orchestration), models lose their fungibility.

- Strength maximization: intelligently routing across providers given the quality and quantity of intelligence required for the task at hand. This will more likely be a feature on a winning platform (e.g., perhaps OpenRouter), as it’s directly correlated to cost, rather than a standalone company.

- Shared memory and context: As different models employ different tricks (hot vs. cold context, native tool calls, selective memories, quantization based on congestion), each will be working with a different world view for what could essentially be the same task. Losing fungibility means vendor lock-in.

- Handoff and black boxes: Internal reasoning, system prompts, and parameters are likely not going to be equivalent in a complex, long-running, multi-agent workflow. So having the same introspection and safety guarantees between agentic steps, as well as the models, is crucial for opening up higher-risk use cases, such as finance, arbitration, healthcare, etc.
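
The routing idea in the first bullet can be sketched as a simple selection rule: pick the cheapest provider that clears a quality bar within a budget. Everything in the block below is hypothetical, including the provider names, quality scores, and prices. It is not how OpenRouter or any other platform actually routes requests.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Provider:
    name: str
    quality: float                 # hypothetical score in [0, 1] from an offline eval
    usd_per_million_tokens: float  # hypothetical blended price


def estimated_cost(provider: Provider, est_tokens: int) -> float:
    """Estimated spend in USD for a task of est_tokens tokens."""
    return provider.usd_per_million_tokens * est_tokens / 1_000_000


def pick_provider(
    providers: Sequence[Provider],
    min_quality: float,
    est_tokens: int,
    budget_usd: float,
) -> Optional[Provider]:
    """Return the cheapest provider meeting the quality bar within budget, or None.

    Ties on cost are broken in favour of higher quality.
    """
    eligible = [
        p for p in providers
        if p.quality >= min_quality and estimated_cost(p, est_tokens) <= budget_usd
    ]
    if not eligible:
        return None
    return min(eligible, key=lambda p: (estimated_cost(p, est_tokens), -p.quality))


if __name__ == "__main__":
    catalog = [
        Provider("small-open-model", quality=0.60, usd_per_million_tokens=0.5),
        Provider("mid-tier-model", quality=0.80, usd_per_million_tokens=3.0),
        Provider("frontier-model", quality=0.95, usd_per_million_tokens=15.0),
    ]
    choice = pick_provider(catalog, min_quality=0.75, est_tokens=200_000, budget_usd=5.0)
    print("routed to:", choice.name if choice else "no provider fits")
```

Note what the sketch leaves out: shared memory, context handoff, and introspection guarantees between providers, which are exactly the harder interoperability problems the other two bullets describe.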

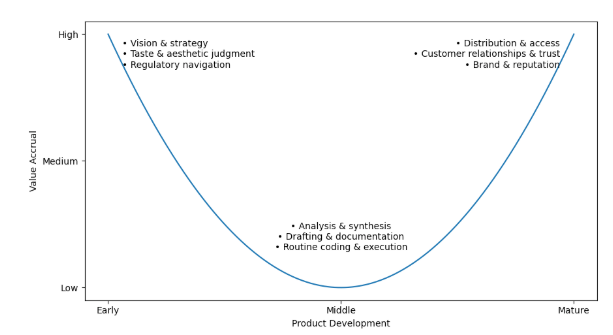

7. The Commoditized Middle

We know the generational capex buildout captures value today, because that is where the friction is. If the above holds, the friction quickly moves past the middle of execution and analysis to the edges described above. This follows the “smiling curve,” an economic theory suggesting that value accrues upstream, in the design of a product, and downstream, in its distribution, whereas the middle manufacturing stage quickly commoditizes.

Abundant intelligence extends this pattern to cognitive work:

High Value Upstream:

- Vision, strategy, and goal-setting

- Taste and aesthetic judgment

- Regulatory navigation

- Relationship and trust-building

Commoditized Middle:

- Analysis and synthesis

- Drafting and documentation

- Standard negotiations

- Routine coding and process execution

High Value Downstream:

- Distribution and access

- Customer relationships and trust

- Physical world presence

- Brand and reputation

Entities, whether autonomous or not, whose moat is customers’ trust and owning the relationship will see their advantages strengthen as execution commoditizes.

8. Consumer Surplus and Distribution

Whether it’s electrification or industrialization, the historical precedent for general-purpose technologies is that consumers capture the majority of surplus, while a thin layer of firms at key chokepoints capture outsized profits.

Abundant intelligence extends this pattern dramatically. The prospect is that:

- World-class analytical, legal, medical, educational, and advisory capability available on demand, anywhere, any time

- And by extension, physical labour, from laying tiles to surgery, which never tires

- Productivity gains flowing to individuals

- Deflationary pressure starts on cognitive services, and flows to any repeatable task

Concentration risks remain real, particularly around:

- Agent platform control over demand allocation

- Data lock-in, network effects, and switching costs

- Potential for surveillance capitalism dynamics

The optimistic equilibrium is that consumers capture most welfare gains, AI utilities earn regulated returns, agent platforms face competitive and regulatory guardrails, and vertical firms compete on service, taste, and trust.

9. The Role of Cryptographic Infrastructure

So, with all of this, we arrive at the heart of the argument. We must acknowledge that AI, as it exists today, has trust issues. These issues are, and will be even more so, a great source of friction. Friction has a direct throughline to value. And crypto - cryptography, incentives, and consensus mechanisms - are the rails on which trust infrastructure is built. Most current crypto x AI projects use the AI meta, and apply token mechanics to commodity AI capabilities, without exploring and addressing the fundamental needs. The substantive opportunity that gets us excited lies in trust infrastructure for autonomous cognition. While it might seem that every force is pushing us towards new levels of scale, we’re excited about things which thrive precisely because they will remain scarce in a world of abundance.

Mathematics is the language of the universe, and mathematics prefers elegant and simple solutions. How the universe chooses to express intelligence is a most curious and elegant puzzle. Cryptographic infrastructure, when combined with incentive mechanisms and consensus protocols, is a most elegant and simple tool to verify scarcity. And so in our opinion, it follows that crypto has an existential role to play - not only in the age of synthetic intelligence, but in the coming era of abundance.

Conclusion

So, to express this essay in 5 bullet points, whether we have successfully “nerd sniped” you or you skimmed to the bottom hoping to find the tl;dr, here it is:

tl;dr

- Architectural Transition: New approaches promise 100-1,000+x efficiency gains, potentially stranding infrastructure investments predicated on transformer scaling.

- Winner Migration: Value moves across phases: infrastructure → platforms → verticals → trust. Current compute leaders may not become platform dominants.

- Scarcity Shift: When cognition is cheap, verification, authenticity, alignment, and physical world integration become the bottlenecks.

- Form Factor Evolution: Chat and copilot interfaces are transitional. Ambient, agentic systems represent the destination (maybe).

- Trust as Infrastructure: Cryptographic verification, not token speculation, addresses the fundamental challenge of autonomous cognition at scale.

The firms and investors positioned for this transition will recognize that the question is not whether AI transforms the economy - that outcome appears certain. The question is which layers of the emerging stack capture durable value, and how to position before the market fully prices the paradigm shift - all while maintaining our humanity and cultural wealth.

Acknowledgements

Immense gratitude to the following for their review:

- Zeynep Tufekci, Princeton

- Davide Crapis, Ethereum Foundation

- Sriram Vishwanath, Georgia Institute of Technology, Mitre

- Pondering Durian, Delphi Research

- Tommy Shaughnessy, Delphi

- Naman Kapasi, Fractal

- Aneel Chima, Cognitive Scientific, Stanford

- Rach Pradhan, Menlo Research

- Shay Boloor, Futurum Group

Footnote

[1] Note: this statement may not be true by the time we publish.

Disclaimer

The information herein is for general information purposes only and does not, and is not intended to, constitute investment advice and should not be used in the evaluation of any investment decision. Such information should not be relied upon for accounting, legal, tax, business, investment, or other relevant advice. You should consult your own advisers, including your own counsel, for accounting, legal, tax, business, investment, or other relevant advice, including with respect to anything discussed herein.

This post reflects the current opinions of the author(s) and is not made on behalf of Hack VC or its affiliates, including any funds managed by Hack VC, and does not necessarily reflect the opinions of Hack VC, its affiliates, including its general partner affiliates, or any other individuals associated with Hack VC. Certain information contained herein has been obtained from published sources and/or prepared by third parties and in certain cases has not been updated through the date hereof. While such sources are believed to be reliable, neither Hack VC, its affiliates, including its general partner affiliates, or any other individuals associated with Hack VC are making representations as to their accuracy or completeness, and they should not be relied on as such or be the basis for an accounting, legal, tax, business, investment, or other decision. The information herein does not purport to be complete and is subject to change and Hack VC does not have any obligation to update such information or make any notification if such information becomes inaccurate.

Past performance is not necessarily indicative of future results. Any forward-looking statements made herein are based on certain assumptions and analyses made by the author(s) in light of their experience and perception of historical trends, current conditions, and expected future developments, as well as other factors they believe are appropriate under the circumstances. Such statements are not guarantees of future performance and are subject to certain risks, uncertainties, and assumptions that are difficult to predict.